Hyperparameters

Hyperparameters are settings that the user chooses before training, rather than values the model learns from the data. Common examples:

- Learning rate

- Regularisation term

- Momentum coefficient

- Learning rate decay

- Number of layers in the neural network and the size of each layer

- Which initialiser to use

- Which activation functions to use

Hyperparameter searching

By the no free lunch theorem, no single configuration is best for every problem, and there is no closed-form formula for the best value of each hyperparameter. So we have to search for good values empirically:

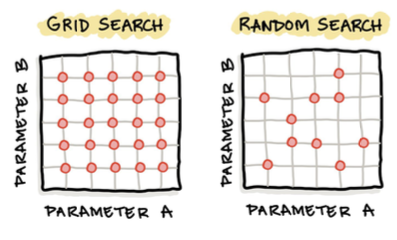

Grid search

We divide the domain of each hyperparameter into a discrete grid of candidate values, then try every combination of values and compute a performance metric (for example, validation accuracy) for each one, keeping the best.
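A minimal sketch of grid search in Python, under stated assumptions: `toy_validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss, and the candidate values in `grid` are made up for illustration. Lower scores are treated as better.

```python
import itertools


def toy_validation_loss(learning_rate, momentum):
    # Hypothetical objective: in practice this would train a model
    # with the given hyperparameters and return its validation loss.
    return (learning_rate - 0.01) ** 2 + (momentum - 0.9) ** 2


def grid_search(grid, objective):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    best_config, best_score = None, float("inf")
    # itertools.product enumerates the Cartesian product of all value lists.
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = objective(**config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Illustrative candidate values (assumed, not taken from any real experiment).
grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "momentum": [0.5, 0.9, 0.99],
}
best_config, best_score = grid_search(grid, toy_validation_loss)
print(best_config, best_score)
```

With 3 values for each of 2 hyperparameters, this evaluates 3 × 3 = 9 configurations.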

Random search

We define the search space as a bounded domain of hyperparameter values, randomly sample points from it, and keep the best configuration found after a fixed number of trials.
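A minimal sketch of random search in Python, under the same kind of assumptions: `toy_validation_loss` is a hypothetical stand-in for a real training run, the `bounds` are illustrative, and each hyperparameter is sampled uniformly within its bounds (other distributions, such as log-uniform for the learning rate, are a common alternative).

```python
import random


def toy_validation_loss(learning_rate, momentum):
    # Hypothetical objective: in practice this would train a model
    # with the given hyperparameters and return its validation loss.
    return (learning_rate - 0.01) ** 2 + (momentum - 0.9) ** 2


def random_search(bounds, objective, n_trials, seed=0):
    """Sample n_trials random configurations and return the best one."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    best_config, best_score = None, float("inf")
    for _ in range(n_trials):
        # Draw each hyperparameter uniformly from its (low, high) interval.
        config = {
            name: rng.uniform(low, high)
            for name, (low, high) in bounds.items()
        }
        score = objective(**config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Illustrative bounds (assumed, not taken from any real experiment).
bounds = {
    "learning_rate": (0.0001, 0.1),
    "momentum": (0.5, 0.99),
}
best_config, best_score = random_search(bounds, toy_validation_loss, n_trials=50)
print(best_config, best_score)
```

The number of trials is fixed in advance, so the cost does not depend on how many hyperparameters are searched.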

Evaluating the searches

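Grid search: Works well on small search spaces, but the number of combinations grows exponentially with the number of hyperparameters.

Random search: Scales better to large domains, because the number of trials is fixed in advance, but there is no guarantee that it returns a good configuration.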
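To make the cost of grid search concrete, the short sketch below counts how many configurations a grid contains. The helper name `grid_size` and the choice of 5 candidate values per hyperparameter are assumptions for illustration.

```python
import math


def grid_size(values_per_hyperparameter):
    # A grid contains one configuration per combination of values,
    # so its size is the product of the per-hyperparameter counts.
    return math.prod(values_per_hyperparameter)


small = grid_size([5, 5])     # 2 hyperparameters, 5 values each
large = grid_size([5] * 7)    # 7 hyperparameters, 5 values each
print(small, large)  # prints: 25 78125
```

Random search, by contrast, evaluates only as many configurations as the trial budget allows, whatever the size of the space.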