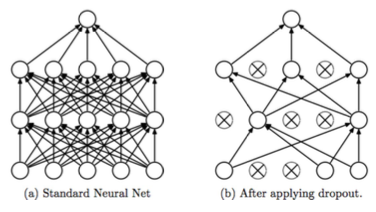

Dropout layer

A regularisation technique that reduces overfitting by randomly dropping out (setting to zero) a fraction of the neuron activations in a neural network during each training iteration.

It is typically inserted:

- after a linear or convolutional layer
- before or after a non-linear activation function

In PyTorch, nn.Dropout takes an argument p (default 0.5), the probability of an element being dropped out (zeroed).
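As a minimal sketch of the placement described above, the snippet below puts a dropout layer after a linear layer and its activation. The layer sizes, batch size, and the name `model` are assumptions made up for illustration; only `nn.Dropout` and its `p` argument come from the notes.

```python
import torch
from torch import nn

# Hypothetical layer sizes, chosen only for illustration.
model = nn.Sequential(
    nn.Linear(20, 64),
    nn.ReLU(),
    nn.Dropout(p=0.5),   # zero each activation with probability 0.5 while training
    nn.Linear(64, 10),
)

x = torch.randn(8, 20)   # batch of 8 samples with 20 features each
logits = model(x)
print(logits.shape)      # torch.Size([8, 10])

# The dropout layer itself holds no learnable parameters.
print(len(list(model[2].parameters())))  # 0
```

Placing the dropout after the activation is one common choice; the notes allow either order.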

Dropout layers are not trainable: they have no learnable parameters.

Since a different subset of neurons is active at each training step, dropout can be thought of as training many smaller sub-networks that share weights and are implicitly combined at evaluation time.

The network learns to distribute information across multiple neurons rather than relying on a few dominant ones.
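The "many smaller networks" view can be illustrated with a small pure-Python sketch. It assumes a single linear output over four hidden activations (all values are made up) and uses the rescaling by 1 / (1 - p) that PyTorch applies during training. For this linear case, averaging the outputs of many randomly masked sub-networks approaches the output of the full network; with non-linear layers in between, the match is only approximate.

```python
import random

def dropout_mask(n, p, rng):
    """Return a keep mask: 0 drops a neuron (probability p), 1 keeps it."""
    return [0 if rng.random() < p else 1 for _ in range(n)]

def forward(activations, weights, mask, p):
    """Weighted sum over the kept neurons, rescaled by 1 / (1 - p)."""
    scale = 1.0 / (1.0 - p)
    return sum(a * w * m * scale for a, w, m in zip(activations, weights, mask))

rng = random.Random(0)                 # fixed seed so the run is repeatable
activations = [0.5, -1.0, 2.0, 0.25]   # hypothetical hidden activations
weights = [1.0, 0.5, -0.5, 2.0]        # hypothetical output weights
p = 0.5

# Output of the full network, with no neurons dropped.
full_output = sum(a * w for a, w in zip(activations, weights))

# Each mask selects a different sub-network; average their outputs.
n_masks = 20000
avg_output = sum(
    forward(activations, weights, dropout_mask(len(activations), p, rng), p)
    for _ in range(n_masks)
) / n_masks

print(full_output)   # -0.5
print(avg_output)    # close to full_output
```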

Differences in training and evaluation mode

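train(): Randomly drops out each neuron with the specified probability p. In PyTorch, the remaining activations are scaled by 1 / (1 - p) so that the expected activation is unchanged.

eval(): Does not drop out any neurons; all activations pass unchanged from the previous layer to the next.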
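The difference between the two modes can be checked directly on a standalone nn.Dropout layer. This is a small sketch; the all-ones input, its length, and the seed are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)           # fixed seed so the run is repeatable
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()                   # training mode: a random mask is applied
train_out = drop(x)
# Each element is now either 0 (dropped) or 1 / (1 - p) = 2 (kept and rescaled).
kept_fraction = (train_out != 0).float().mean().item()

drop.eval()                    # evaluation mode: the layer acts as the identity
eval_out = drop(x)

print(kept_fraction)             # roughly 0.5
print(torch.equal(eval_out, x))  # True
```

Calling train() or eval() on a parent model switches every dropout layer inside it, so the mode is normally set once on the whole model rather than per layer.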