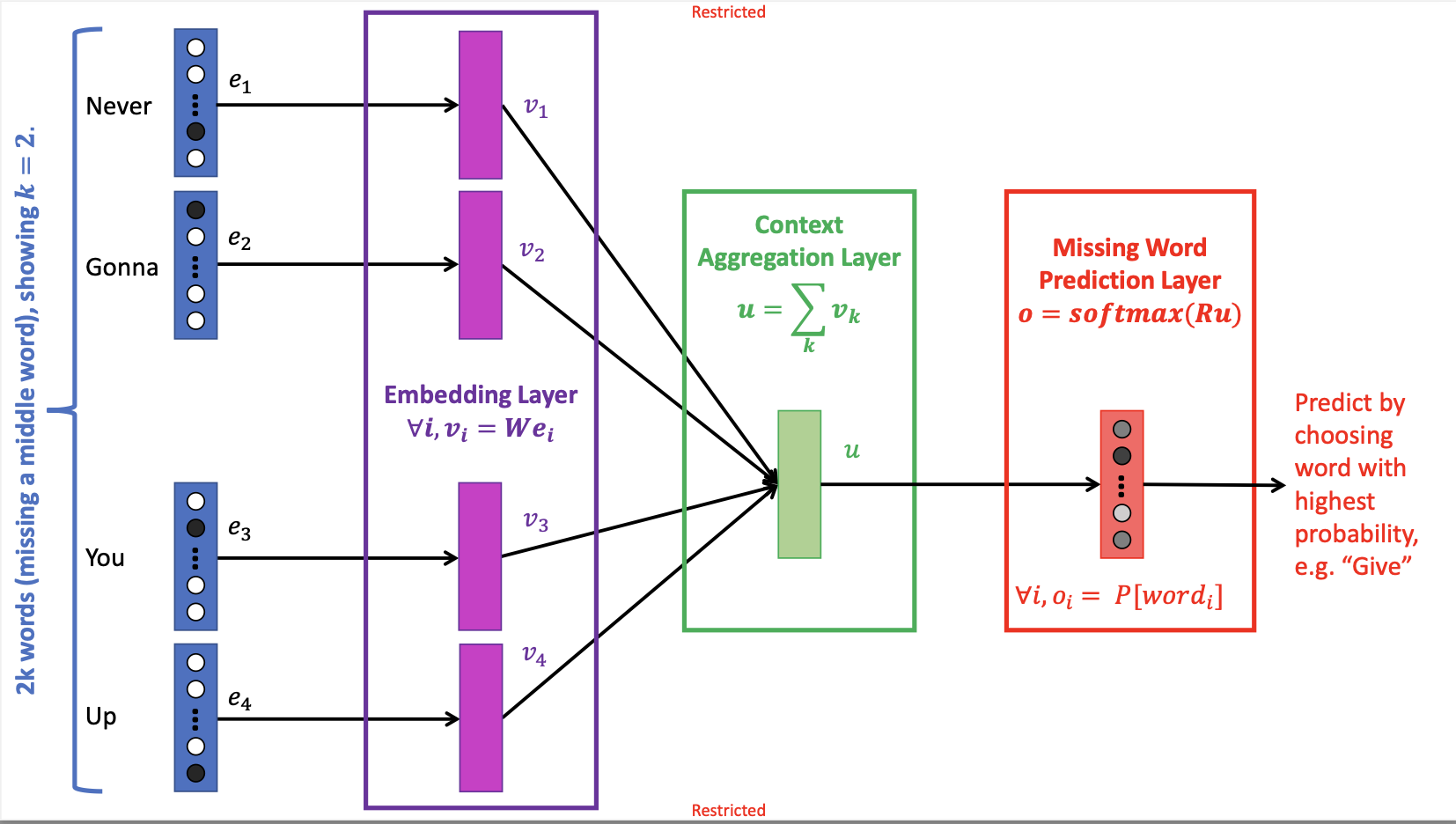

Continuous Bag of Words (CBoW)

Predicts a middle word given its surrounding context words, i.e. predicts the word in the middle of the window, at index $t$

Uses a sliding window of size $2m+1$: the middle word plus $m$ context words on each side
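A minimal pure-Python sketch of the sliding window, assuming a window of size $2m+1$ ($m$ context words on each side of position $t$). The helper name `cbow_pairs` is hypothetical, and skipping positions where the window would run past either end of the text is an assumption (padding the ends is another option).

```python
def cbow_pairs(tokens, m):
    """Hypothetical helper: return (context words, middle word) pairs.

    Uses a window of size 2*m + 1 and skips positions whose window
    would run past either end of `tokens` (an assumption, not padding).
    """
    pairs = []
    for t in range(m, len(tokens) - m):
        # m words to the left of t and m words to the right of t
        context = tokens[t - m:t] + tokens[t + 1:t + m + 1]
        pairs.append((context, tokens[t]))
    return pairs


tokens = "the quick brown fox jumps over".split()
for context, middle in cbow_pairs(tokens, m=2):
    print(context, "->", middle)
# ['the', 'quick', 'fox', 'jumps'] -> brown
# ['quick', 'brown', 'jumps', 'over'] -> fox
```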

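Input: start with the one-hot vectors $e_{t-m}, \dots, e_{t-1}, e_{t+1}, \dots, e_{t+m}$ of the $2m$ context words, each of length $|V|$, the vocabulary size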
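One possible way to build the one-hot inputs, as a small sketch. `build_vocab` and `one_hot` are hypothetical helpers, and numbering words in order of first appearance is just one convention for assigning indices.

```python
def build_vocab(tokens):
    """Hypothetical helper: map each distinct token to an index 0..|V|-1,
    in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def one_hot(index, vocab_size):
    """Hypothetical helper: a length-|V| list with a single 1 at `index`."""
    vec = [0] * vocab_size
    vec[index] = 1
    return vec


tokens = "the cat sat on the mat".split()
vocab = build_vocab(tokens)                # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(one_hot(vocab["sat"], len(vocab)))   # [0, 0, 1, 0, 0]
```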

Embedding Layer:

- Add a shared linear transformation $W$ of size $d \times |V|$, applied to each input one-hot vector $e_{t+j}$

- We get a dense vector $h_{t+j} = W e_{t+j}$ of size $d$, the size of the new word embedding, often chosen so that $d \ll |V|$

- This layer learns the word embeddings
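The sketch below, assuming the shared transformation is a matrix $W$ of size $d \times |V|$ stored as a list of rows, illustrates why the embedding layer is cheap: multiplying $W$ by a one-hot vector just selects one column of $W$, which is why embedding layers are usually implemented as table lookups. The numbers and the helper names `matvec` and `embed` are hypothetical.

```python
def matvec(W, x):
    """Multiply a d x |V| matrix (list of rows) by a length-|V| vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def embed(W, index):
    """Lookup shortcut: W times one_hot(index) equals column `index` of W."""
    return [row[index] for row in W]


# Toy embedding matrix W with d = 2 and |V| = 4 (arbitrary values).
W = [[0.1, 0.2, 0.3, 0.4],
     [0.5, 0.6, 0.7, 0.8]]
e = [0, 0, 1, 0]           # one-hot vector for the word with index 2
print(matvec(W, e))        # [0.3, 0.7]
print(embed(W, 2))         # [0.3, 0.7]
```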

Context Aggregation Layer:

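- Sums all vectors $h_{t+j}$, which combines the contextual meaning vectors together: $h_t = \sum_{j=-m,\, j \neq 0}^{m} h_{t+j}$

- Non-trainable layer (it has no parameters), which produces a single vector $h_t$ of size $d$

- Roughly encapsulates the meaning of the entire incomplete sentence of $2m$ input words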
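A minimal sketch of the aggregation step, assuming the $2m$ context embeddings are given as equal-length lists; `aggregate` is a hypothetical name and the vectors are toy values. Note that the function has no parameters, matching the point that this layer is not trained.

```python
def aggregate(context_vectors):
    """Hypothetical helper: element-wise sum of the 2m context embeddings.

    No trainable parameters: the output is fully determined by the inputs.
    """
    d = len(context_vectors[0])
    return [sum(vec[k] for vec in context_vectors) for k in range(d)]


# Four toy context embeddings (m = 2) with d = 3.
h = [[1.0, 0.0, 2.0],
     [0.5, 1.0, 0.0],
     [0.0, 2.0, 1.0],
     [1.5, 1.0, 1.0]]
print(aggregate(h))   # [3.0, 4.0, 4.0]
```

Some implementations average the context vectors instead of summing them; this only rescales $h_t$ by $1/(2m)$.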

Prediction Layer:

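- Final linear layer (matrix $U$, size $|V| \times d$) followed by a softmax function

- Produces an output $o_t = \operatorname{softmax}(U h_t) \in \mathbb{R}^{|V|}$ with $o_{t,i} \geq 0$, so that $\sum_{i=1}^{|V|} o_{t,i} = 1$

- Predicts a probability distribution over the entire vocabulary $V$ for the missing word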
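A small sketch of the prediction layer, assuming the output matrix is called $U$ with shape $|V| \times d$ and is stored as a list of rows. `softmax` and `predict` are hypothetical helper names and the numbers are toy values; subtracting the maximum score before exponentiating is a standard trick to avoid overflow and does not change the result.

```python
import math


def softmax(z):
    """Numerically stable softmax: subtract the max score before exp."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [v / total for v in exps]


def predict(U, h):
    """Hypothetical helper: o_t = softmax(U h_t), U is |V| x d, h_t has length d."""
    scores = [sum(u_k * h_k for u_k, h_k in zip(row, h)) for row in U]
    return softmax(scores)


# Toy output matrix U with |V| = 3 and d = 2 (arbitrary values).
U = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
h_t = [0.5, 2.0]
o_t = predict(U, h_t)                               # scores are [0.5, 2.0, 2.5]
print(o_t)                                          # one probability per vocabulary word
print(sum(o_t))                                     # 1.0 up to floating-point rounding
print(max(range(len(o_t)), key=o_t.__getitem__))    # 2 (most likely word index)
```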

Training:

- Use a sliding window over a large text corpus to generate (context words, middle word) pairs

- The classification loss is preferably the negative log-likelihood of the true middle word: $$L_t = -\log P(o_t = e_t \mid e_{-t})$$ where